The current generation of drug-eluting stents (DES) clinically available in the US represents a significant advance over angioplasty, bare metal stents and first-generation DES. The Xience everolimus-eluting stents (Abbott Vascular), Promus everolimus-eluting stents (Boston Scientific) and Resolute zotarolimus-eluting stents (Medtronic) offer an optimal balance of safety and efficacy. More recently, the SYNERGY platinum-chromium everolimus-eluting stent, a thin-strut platform with a bioresorbable poly(lactide-co-glycolide) polymer, became clinically available and shows significantly lower rates of stent thrombosis than durable polymer DES. Despite these advances, the durable metallic platform and/or permanent polymer coating that remains in the arterial wall induces persistent vasomotor dysfunction and sustained inflammation, which promote the development of in-stent neoatherosclerosis.1

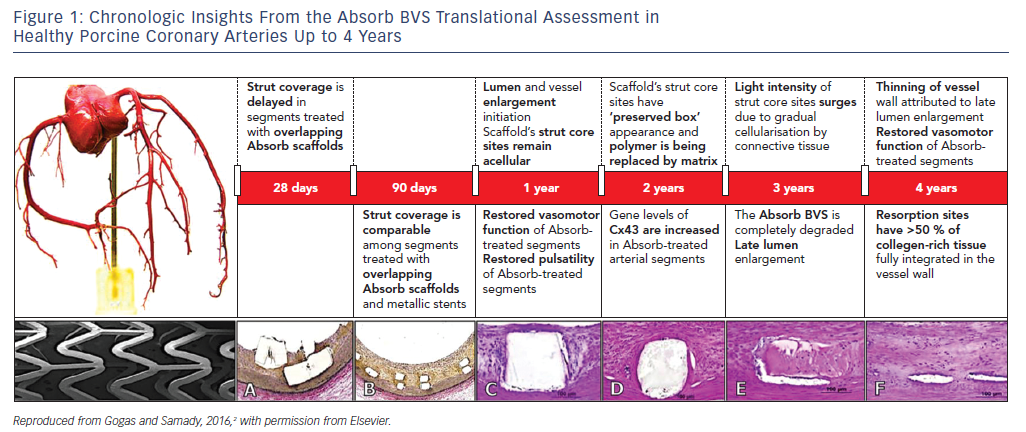

Phenotypic (anatomic and physiologic) and genotypic (gene expression) restoration of the artery over the course of scaffold biodegradation has been described in porcine coronary arterial segments, with the translational implication of moving from coronary angioplasty with contemporary stenting to coronary angioplasticity with a bioresorbable scaffold (BRS; Figure 1).2 However, evidence from large-scale randomized trials and meta-analyses has shown that first-generation BRS offer no incremental benefit for coronary revascularisation and are associated with significantly higher rates of target lesion failure (TLF) and scaffold thrombosis (ScT).3

This article gives an overview of the preclinical/clinical mismatch observed with current-generation BRS and expands on the mechanisms of failure of the first-generation ABSORB bioresorbable vascular scaffold (BVS) that led to the technology being withdrawn. It discusses the evolving innovations needed to develop scaffolds with more biocompatible physical properties that, with optimal deployment techniques, could provide a suitable alternative to metal stents for coronary revascularisation.

Mismatch Between Experimental and Clinical Observations

Large animals are the standard experimental models for the preclinical assessment of safety and efficacy of coronary stents. BRS such as the first-generation BVS underwent extensive translational in vivo and ex vivo testing with angiographic, invasive imaging and genetic evaluation at different time points over periods of up to 4 years.

The most striking example of experimental/clinical mismatch was the discordance between the vasomotor responses of non-atherosclerotic porcine coronary arteries and those of human coronary segments treated with either the ABSORB BVS or the Xience V stent. In large animals, the gradual loss of the scaffold’s mechanical integrity at 1 and 2 years was associated with restoration of vasomotor responses (constrictive and expansive) to challenge with endothelium-dependent and endothelium-independent agents.4 In contrast, the ABSORB II randomized clinical trial, which investigated endothelium-independent vasoreactivity, demonstrated greater expansive responses at 3 years in the cohort treated with a metallic stent.5 It has been speculated that the greater interstrut distance of metallic stent rings, or late metal strut malapposition associated with expansive remodelling, are the most relevant mechanisms behind this mismatch. Although there was no direct impact on clinical outcomes, this finding raised concerns about whether vascular restoration occurs in the clinical setting.

Novel modes of failure observed clinically with the Absorb BVS but not during animal testing, namely late intraluminal scaffold dismantling and scaffold discontinuity, raised questions over whether non-atherosclerotic large animals are valid models for assessing the safety and efficacy of these technologies. In non-atherosclerotic coronary arteries, the absence of atherosclerotic plaque at the site of stent/scaffold deployment eliminates the need for optimal lesion pre-dilatation, and deployment requires a stent/artery ratio of at least 1.1:1 to avoid device dislodgement, as opposed to the 1:1 stent/artery ratio used in the clinical setting. The critical components of the deployment technique, namely optimal lesion preparation (P), 1:1 scaffold sizing (S) and post-dilatation (P), which improve clinical endpoints when properly applied in the clinical setting, therefore have little significance at the preclinical stage.
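The sizing difference between preclinical and clinical deployment is simple arithmetic. The sketch below, assuming a hypothetical 3.0 mm reference vessel diameter chosen only for illustration, computes the smallest nominal device diameter that reaches a given stent/artery ratio and compares 1.1:1 preclinical oversizing with clinical 1:1 sizing. The function names are illustrative and are not part of any published tool.

```python
def stent_artery_ratio(device_mm: float, reference_mm: float) -> float:
    """Ratio of nominal device diameter to reference vessel diameter."""
    if reference_mm <= 0:
        raise ValueError("reference diameter must be positive")
    return device_mm / reference_mm


def minimum_device_diameter(reference_mm: float, target_ratio: float) -> float:
    """Smallest nominal device diameter that reaches the target stent/artery ratio."""
    if reference_mm <= 0 or target_ratio <= 0:
        raise ValueError("diameter and ratio must be positive")
    return reference_mm * target_ratio


# Hypothetical reference vessel diameter, for illustration only (not trial data)
reference_mm = 3.0
for label, ratio in [("preclinical oversizing", 1.1), ("clinical 1:1 sizing", 1.0)]:
    device_mm = minimum_device_diameter(reference_mm, ratio)
    print(f"{label}: device >= {device_mm:.2f} mm "
          f"(ratio {stent_artery_ratio(device_mm, reference_mm):.2f}:1)")
```

With a 3.0 mm reference segment, 1.1:1 sizing corresponds to a device about 0.3 mm larger than the vessel, whereas 1:1 sizing matches the vessel diameter.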

Despite these fundamental differences, large animals remain the gold standard for phase I testing of interventional devices.

Inferior Safety with First Generation Absorb Bioresorbable Vascular Scaffold

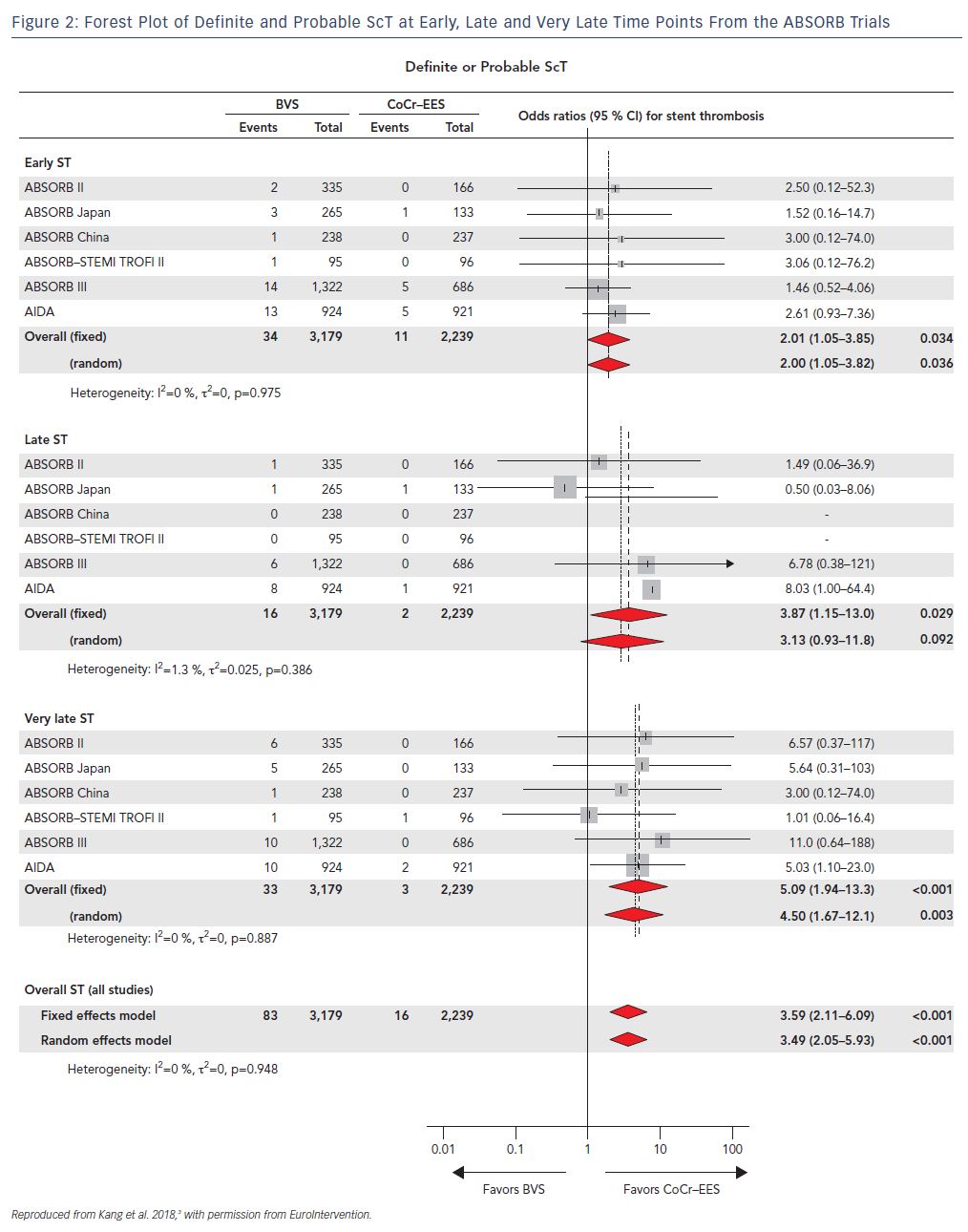

Concerning signs of increased ScT rates with the first-generation BVS were initially reported in real-world registries such as GHOST-EU and in selected case reports, and were confirmed when the AIDA randomized trial was published.7 In this study, 1,845 patients undergoing percutaneous coronary intervention (PCI) were randomized to either the first-generation BVS (n=924) or contemporary metallic stents (n=921). The trial was halted and reported early because of safety concerns related to a threefold increase in the risk of definite or probable ScT over 2 years. Similarly, small registries with intracoronary imaging follow-up consistently identified novel mechanisms of scaffold failure leading to ScT.

Furthermore, a recent network meta-analysis of 91 randomized trials including 105,842 patients treated with metallic stents or BRS (mean follow-up 3.7 years) found that ScT rates after BVS deployment were consistently and significantly higher at the early (≤30 days), late (31 days–1 year) and very late (>1 year) time points, with the trend increasing after the first year (Figure 2).3
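The three time windows used in the meta-analysis can be written as a small classification rule. This is a minimal sketch under two stated assumptions: event timing is recorded in whole days after deployment, and 1 year is counted as 365 days. The function name is hypothetical.

```python
def classify_sct_timing(days_after_implant: int) -> str:
    """Classify a scaffold thrombosis event by time since deployment.

    Windows follow the text: early <=30 days, late 31 days to 1 year,
    very late >1 year. One year is assumed to equal 365 days.
    """
    if days_after_implant < 0:
        raise ValueError("days_after_implant must be non-negative")
    if days_after_implant <= 30:
        return "early"
    if days_after_implant <= 365:
        return "late"
    return "very late"


# Illustrative event times in days (not patient data)
for day in [2, 30, 31, 200, 365, 366, 900]:
    print(day, classify_sct_timing(day))
```

The boundaries are inclusive on the upper end of each window (day 30 is early, day 365 is late), matching the ≤30 days and 31 days–1 year wording.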

A combination of etiologic factors, namely the scaffold’s bulky, non-streamlined strut design, suboptimal deployment techniques and novel modes of scaffold failure, constituted the leading safety problems that caused the technology to be withdrawn.

Examining these mechanisms of failure, as below, should guide design iteration towards next-generation scaffolds with more biocompatible profiles, with the aim of achieving clinical outcomes non-inferior to those of contemporary DES.

Non-Streamlined and Bulky Strut Design

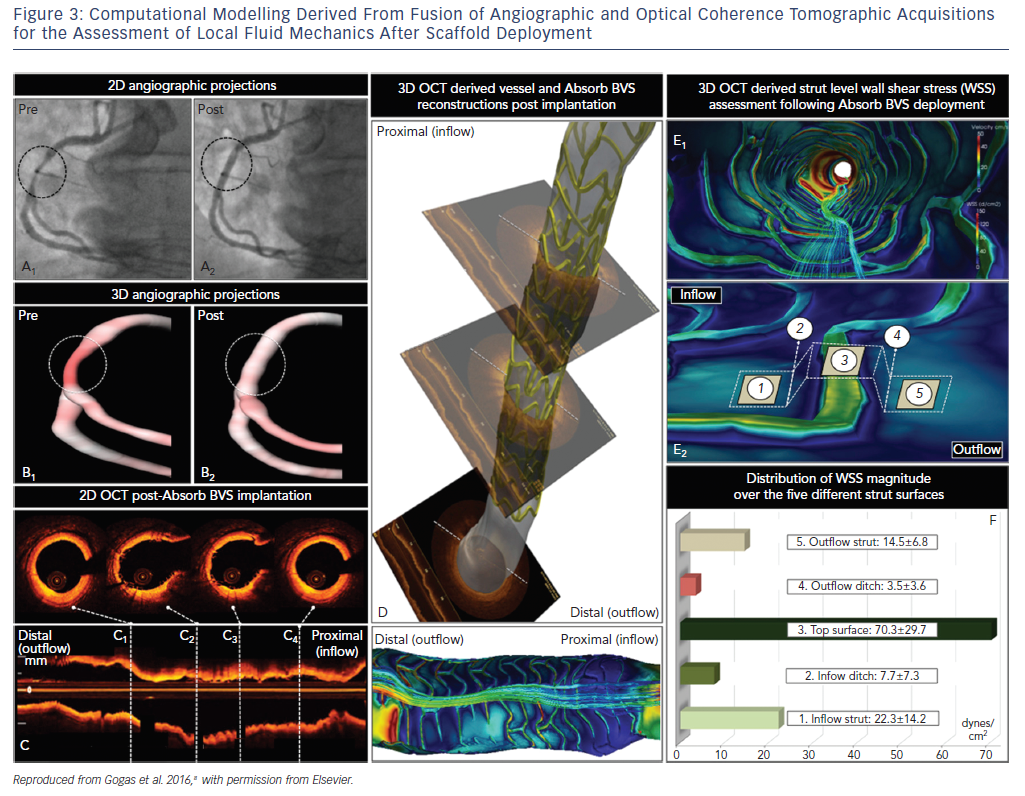

The bulky strut design of the first-generation scaffolds such as the ABSORB BVS induced solid- and fluid-mechanical alterations after deployment, leading to excessive mechanical loading over the arterial circumference and recirculation zones around the strut surface (Figure 3).8

Mechanical stretch is sensed by mechanoreceptors such as stretch-activated channels and integrins, which activate downstream signaling pathways that promote inflammation, apoptosis and angiogenesis.9,10 Circumferential mechanical loading after BRS deployment is temporary and declines dramatically by 6 months, unlike the permanent stretch imposed by metallic stents. Nevertheless, the combination of increased circumferential stress and low endothelial shear stress induced by the thicker struts triggered greater neointimal hyperplasia and late lumen loss over time than with contemporary metal DES.

Next-generation scaffolds have been developed with thinner struts (~90 μm) and a circular rather than rectangular strut cross-section, which may mitigate these rheological and mechanical issues and reduce the incidence of TLF and ScT.

Suboptimal Deployment Techniques

Optimal lesion preparation (P), correct scaffold sizing (S) and proper post-dilatation (P), collectively the PSP technique, are essential for good clinical outcomes.

The PSP steps were not included in the manufacturer’s initial instructions, and suboptimal technique in the acute phase of scaffold deployment resulted in only modest strut embedment in the arterial wall, leading to scaffold underexpansion or strut malapposition.

Pooled observations from the ABSORB trials showed that BVS implantation in properly sized vessels was an independent predictor of freedom from TLF at 1 year (HR 0.67; p=0.01) and 3 years (HR 0.72; p=0.01), and from ScT at 1 year (HR 0.36; p=0.004). Aggressive pre-dilatation was an independent predictor of freedom from ScT between 1 and 3 years (HR 0.44; p=0.03), and optimal post-dilatation was an independent predictor of freedom from TLF between 1 and 3 years (HR 0.55; p=0.05).11
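To put these hazard ratios in relative terms: assuming each HR compares the hazard of the event (TLF or ScT) with the predictor present versus absent, a value below 1 corresponds to a relative hazard reduction of (1 − HR) × 100%. The sketch below applies that conversion to the values quoted above. It is a relative measure only; without baseline event rates it cannot be converted into an absolute risk reduction, and it ignores confidence intervals.

```python
# Hazard ratios as quoted in the text for the pooled ABSORB analysis
HAZARD_RATIOS = {
    "TLF at 1 year, proper vessel sizing": 0.67,
    "TLF at 3 years, proper vessel sizing": 0.72,
    "ScT at 1 year, proper vessel sizing": 0.36,
    "ScT 1-3 years, aggressive pre-dilatation": 0.44,
    "TLF 1-3 years, optimal post-dilatation": 0.55,
}


def relative_hazard_reduction(hr: float) -> float:
    """Percentage reduction in hazard implied by a hazard ratio below 1."""
    if hr <= 0:
        raise ValueError("hazard ratio must be positive")
    return (1.0 - hr) * 100.0


for label, hr in HAZARD_RATIOS.items():
    print(f"{label}: HR {hr:.2f} -> {relative_hazard_reduction(hr):.0f}% lower hazard")
```

On this reading, proper vessel sizing corresponds to roughly a two-thirds lower hazard of ScT at 1 year (HR 0.36), the largest relative effect among the quoted predictors.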

Optical Coherence Tomographic Guidance

Optical coherence tomography (OCT) guidance is essential for vessel sizing and for the assessment of novel modes of late and very late scaffold failure.

Accurate vessel sizing for BVS implantation can be reliably performed only with OCT. Although experienced operators are able to size the vessel correctly by ‘eyeballing’, this approach is highly subjective. In addition, quantitative coronary angiography (QCA) consistently underestimates the actual reference vessel diameter (RVD), while intravascular ultrasound slightly overestimates vessel size. Although the manufacturer’s initial instructions did not warn operators to avoid implanting BRS in vessels with an RVD <2.5 mm, it soon became evident that BVS deployment in small vessels led to a fourfold increase in ScT rates. In the ABSORB III trial, BRS deployment in vessels with an RVD <2.25 mm measured by QCA resulted in increased rates of TLF (12.9% versus 8.3%) and device thrombosis (4.6% versus 1.5%) compared with metallic DES.11
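The ABSORB III small-vessel figures can be read both as absolute differences and as crude rate ratios. The sketch below computes both from the percentages quoted above (BVS first, metallic DES second). It is unadjusted arithmetic on reported proportions, not a reanalysis of the trial, and it ignores confidence intervals and follow-up duration.

```python
from typing import Dict, Tuple

# Event rates (%) in vessels with QCA RVD < 2.25 mm, as quoted in the text:
# (BVS, metallic DES)
SMALL_VESSEL_RATES: Dict[str, Tuple[float, float]] = {
    "TLF": (12.9, 8.3),
    "Device thrombosis": (4.6, 1.5),
}


def compare_rates(bvs_pct: float, des_pct: float) -> Tuple[float, float]:
    """Return (absolute difference in percentage points, crude rate ratio)."""
    if des_pct <= 0:
        raise ValueError("comparator rate must be positive")
    return bvs_pct - des_pct, bvs_pct / des_pct


for outcome, (bvs, des) in SMALL_VESSEL_RATES.items():
    diff, ratio = compare_rates(bvs, des)
    print(f"{outcome}: +{diff:.1f} percentage points, rate ratio {ratio:.2f}")
```

These figures give a crude ratio of about 1.55 for TLF and about 3.07 for device thrombosis, so the relative excess is larger for thrombosis even though its absolute difference is smaller.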

Although OCT-guided BVS implantation was performed in only a small percentage of cases in the ABSORB trials, future studies of next-generation BRS are being designed with mandatory OCT guidance for scaffold deployment.

Follow-up OCT imaging has recently described novel mechanisms of scaffold failure, such as scaffold discontinuity associated with late intraluminal scaffold dismantling. Other modes of failure, similar to those associated with metallic stents, included malapposition, neoatherosclerosis and scaffold shrinkage; in aggregate, these offset the anticipated benefits of using this new technology in interventional cardiology.

Future Perspectives

Improved scaffold designs will need to make implantation as user-friendly as metal stent implantation, so that scaffolds can be deployed not only by the most experienced operators but also by less experienced ones, matching the high bar set by metal DES.

The reason to persevere is not any expectation that scaffolds will outperform metal stents in the intermediate term, but the concern that metal stents may begin to produce significant adverse events many years after implantation. That concern will have to become a demonstrated reality rather than a hypothesis for BRS to replace metal stents.

For the time being, although the ABSORB scaffold has been withdrawn, the authors believe that these disappearing technologies will eventually reappear in an improved form; whether they will be competitive with current and future coronary stents remains an open question.12